PROGRESS 2050: Toward a prosperous future for all Australians

20/05/2022

While some of the technical elements in an ethical governance framework may seem daunting, the risk management and transparency benefits are clear.

At the heart of calls for ethical AI lies the very human emotion of fear. It is a fear that was neatly expressed in the ‘Paperclip Maximiser’ thought experiment by Swedish philosopher Nick Bostrom in 2003, in which a hypothetical AI is directed to maximise the number of paperclips and ultimately consumes all the resources in the world to do so. The fear revealed in the experiment is that without sufficient controls, an AI may easily spin out of control and cause unintended but serious harm.

This fear grows with the scale of the AI in question. A minor AI experiment may yield results that are ‘interesting’ but will never impact the public at large. An AI used in production systems, however, may make decisions at a speed that compounds the damage done, at a scale not possible if humans manually reviewed every decision. Maintaining the human-in-the-loop will be a necessary brake to manage risk in many AI scenarios.

While it is easy to perceive governance requirements as a burden, there are tangible benefits to be derived from an ethical framework beyond countering fear of AI. For example, where the fear of AI is driven by a fear of the unknown or biased treatment at the hands of a machine, ethical governance demands explainability, fairness and processes that will ultimately improve adoption, and therefore the value contributed by AI.

The critical aspects of an ethically effective framework for AI adoption may vary depending on the country or industry in question. There are, however, consistent principles that are summarised for Australian organisations by the Department of Industry, Science, Energy and Resources in Australia’s AI Ethics Framework. They cover principles such as being Reliable, ensuring Privacy and Security and providing processes for Contestability. They also cover issues classically in the domain of AI developers: Fairness, Accountability, Transparency and Explainability (FATE). Areas that may be more challenging to address are those where automated systems meet human interests, such as ensuring that AI operates for the benefit of the individual, society and the environment.

There are benefits to be found at each stage of the ethical framework. The following are of greatest relevance to AI developers.

Fair – While there is social credit to be derived from a governance framework that ensures all groups are adequately catered to by an AI, there are also statistical benefits in addressing model bias. Unknown biases will result in unexpected treatments of potential customers or citizens and ultimately undermine the AI’s anticipated accuracy. Seeking out and correcting for biases will ultimately improve model accuracy.
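As a minimal sketch of what seeking out bias can look like in practice, the Python below compares how often a model selects members of each group and how accurate it is for each group. The function names, the group labels and the data are hypothetical and made up purely for illustration; a real review would use the organisation's own data and a fairness measure suited to its context.

```python
from collections import defaultdict


def group_report(groups, y_true, y_pred):
    """Return the selection rate and accuracy of binary predictions per group."""
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "correct": 0})
    for group, truth, pred in zip(groups, y_true, y_pred):
        c = counts[group]
        c["n"] += 1
        c["selected"] += int(pred == 1)    # model gave the favourable outcome
        c["correct"] += int(pred == truth)  # model agreed with the true label
    return {
        group: {
            "selection_rate": c["selected"] / c["n"],
            "accuracy": c["correct"] / c["n"],
        }
        for group, c in counts.items()
    }


def selection_rate_gap(report):
    """Largest difference in selection rate between any two groups."""
    rates = [r["selection_rate"] for r in report.values()]
    return max(rates) - min(rates)


# Made-up illustrative data: two groups of four people each.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]

report = group_report(groups, y_true, y_pred)
gap = selection_rate_gap(report)
print(report)
print("selection rate gap:", gap)
```

In this toy data the model is equally accurate for both groups yet selects group A three times as often as group B, which is the kind of pattern an overall accuracy figure alone would hide.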

Accountable – The AI process has many participants, so accountability is assigned to various parties at different stages in the AI’s lifecycle. Clearly established accountability accelerates problem resolution and underpins the smooth transition of a model through its development phases, as well as reducing the risk of system failure due to turnover of key personnel.

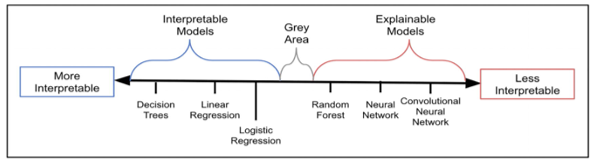

Explainable – Traditional statistical models have a reputation for being easily interpretable. More advanced techniques favoured in AI don’t necessarily provide such a straightforward interpretation. Applying techniques with cryptic names such as partial dependence (PD) plots, individual conditional expectation (ICE) plots, local interpretable model-agnostic explanations (LIME) and Shapley values increases the chances of detecting unexpected behaviours that undermine a model’s longevity.
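To make one of these techniques concrete, the sketch below computes exact Shapley values for a single prediction by averaging each feature's marginal contribution over every possible ordering of the features. The toy model, the instance and the all-zero baseline are assumptions chosen for illustration. Enumerating every ordering is only feasible for a handful of features, so practical tools approximate this calculation.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction, relative to a baseline input.

    Features are switched from their baseline value to the instance value one
    at a time, and each feature is credited with the change in prediction it
    causes, averaged over every possible ordering.
    """
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        x = list(baseline)
        previous = predict(x)
        for i in order:
            x[i] = instance[i]
            current = predict(x)
            totals[i] += current - previous
            previous = current
    return [t / len(orderings) for t in totals]


def toy_model(x):
    """Hypothetical score: two linear terms plus an interaction term."""
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[2]


instance = [1.0, 2.0, 1.0]
baseline = [0.0, 0.0, 0.0]

phi = shapley_values(toy_model, instance, baseline)
print(phi)
print(sum(phi), toy_model(instance) - toy_model(baseline))
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term is shared equally between the two features involved. A feature with an unexpectedly large attribution is a prompt for closer review.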

Transparent – Transparency relates to the broader set of business processes involving the model. Research has shown that the absence of trust is a significant inhibitor to the adoption of AI, with transparency a key counterpoint. To foster trust from employees and customers, the AI’s processes must be sufficiently transparent to show that the entire process is operating as intended.

Image source: Interpreting Machine Learning Models: Learn Model Interpretability and Explainability Methods, by Anirban Nandi and Aditya Kumar Pal

Management of an AI’s reliability, safety, contestability, privacy and related security protocols is also considered a top priority in the ethical application of AI. These concerns apply to most processes where decisions impact customers and are a subject for another day. The benefits of these practices have long been established, and the practices themselves can be adapted to incorporate AI’s unique features.

For most countries, established regulatory frameworks still lag the adoption of AI in the private and public sectors. This means the boundaries for ethical adoption of AI are being drawn by those with an interest in self-regulation, in advance of any enforcement from authorities. This proactive approach will help these organisations retain their social licence for the implementation of AI, whereas less responsible practitioners may find their progress impeded by future regulatory intervention that compensates for their own lack of appropriate governance.

Ultimately, progress in the face of fear of the unethical use of AI should be made through increased trust. Trust will be earned through the consistent and judicious application of the principles described above. This approach will ensure that AI-driven interactions are perceived as being to the benefit of customers and citizens. Without the investment in an ethical governance framework, the adoption of AI will unfortunately lag.