AI is transforming our world

Artificial intelligence (AI) has the potential to unlock transformative economic, social and environmental opportunities for Australia. The potential for public benefit is significant, provided the development, adoption and use of AI is governed in a safe, responsible and sustainable manner. Governing AI in this way underpins community trust and stakeholder support and works to retain a social license. Importantly, good governance of AI also increases the likelihood that organisations will implement and scale up AI effectively and successfully. In other words, good governance creates a virtuous cycle whereby support for the widespread investment in and adoption of AI is maintained, and the transformative benefits of AI are more likely to be realised both at a business and societal level. As Australia seeks to keep pace with international competitors and leaders in the adoption and use of AI, it must also seek to be a global leader in responsible AI.

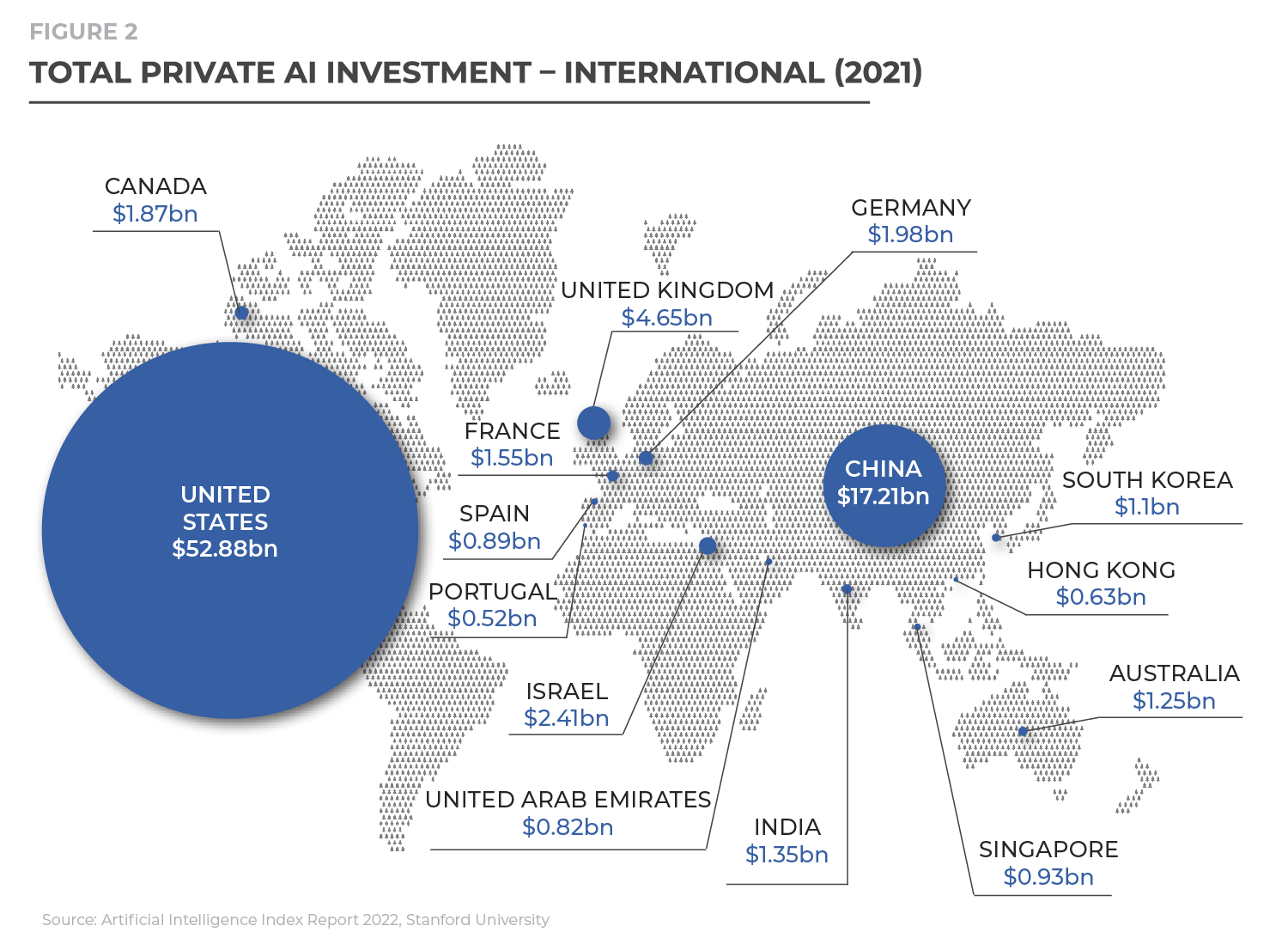

In Australia and other Western economies, the adoption of ethical AI principles has been an important feature of building robust AI governance practices. In 2019, the Australian Federal Government announced its voluntary Artificial Intelligence (AI) Ethics Framework to guide businesses and governments in designing, developing and implementing AI responsibly. A number of businesses tested these principles in a pilot project overseen by the Department of Industry, Innovation and Science (now the Department of Industry, Science and Resources). The pilot aimed to collate practical learnings and understand the challenges of implementing the principles, which included:

- Ensuring procured services and solutions are ethically responsible;

- The need to raise awareness of AI ethics, educate staff on the benefits of implementing AI ethics and improve training; and

- How to judge, measure and continually reassess principles that involve value judgements like ‘fairness’.

The release of these principles in Australia coincided with the peak in public discussion, development and adoption of AI ethical principles in industrialised Western economies in early 2019. However, nearly two years on from ‘peak principles’, many commentators were still lamenting evidence of poor AI governance. This was a common theme at CEDA’s 2020 Public Interest Technology Forum.

Prompted by these observations, CEDA undertook a series of roundtable workshops with member organisations in late 2021 and early 2022 to gain insights on the progress towards and challenges of using responsible AI principles and practices within organisations.

This report summarises key insights from these workshops and is designed to highlight the practical progress and challenges of responsible AI implementation. It also seeks to identify where further support is needed to accelerate implementation.

From our roundtable discussions, it became clear that the level of AI maturity within Australian organisations remains low or evolving. Key challenges highlighted through the workshops included:

- ‘Siloed’ approaches to AI within organisations;

- The misunderstanding of responsible AI within organisations, including at the leadership level;

- The tendency for governance and ethical use of AI to be deemed largely the province of tech teams;

- The difficulty of building an organisation-wide shared understanding and approach to aspects of responsible AI such as fairness and explainability;

- The lack of formal governance frameworks, or the existence of frameworks that are divorced from broader risk and governance approaches; and

- How to effectively ensure that procured AI services and solutions are responsible.

Reflecting these challenges, one theme that emerged for those organisations at lower levels of AI maturity was a tendency towards less nuanced approaches in building business cases and risk management. These organisations tended to use a default ‘yes’ or ‘no’ approach to the consideration of AI. Those inclined to ‘yes’ were driven by a desire to ‘keep up’ with rapidly evolving trends and competitors, while potentially downplaying or misunderstanding risks and the need for risk mitigation. At the other end, those inclined to ‘no’ focused on identifying and avoiding all potential risks associated with AI, consequently downplaying or ignoring potential benefits and opportunities. Neither approach is likely to deliver the right long-term outcome for the business or its customers.

The siloed approach to AI and AI risk management within organisations increases the likelihood of governance and strategy shortcomings. Without a more multi-disciplinary approach connected to broader organisational practices and values, important risks are more likely to be missed or underestimated, and organisations may become overconfident in their ability to mitigate or manage those risks. In terms of business strategy, a lack of broader awareness and education about AI capabilities and potential runs the risk of opportunities being overlooked.

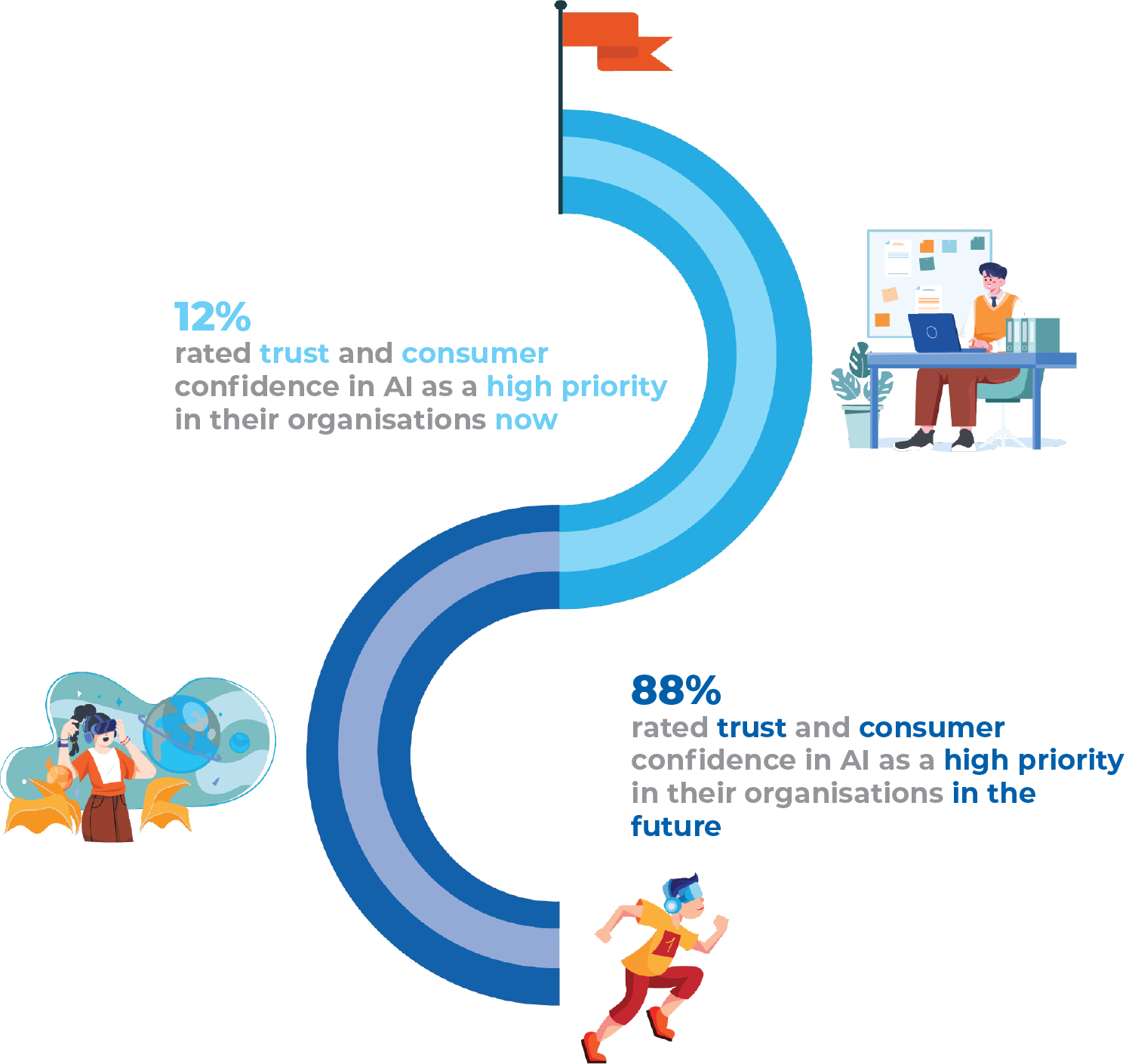

CEDA’s workshops also identified a significant disconnect in the prioritisation of trust and consumer confidence. While the vast majority of workshop participants (88 per cent) reported that trust and consumer confidence in AI is a high or very high priority for the future, only 12 per cent indicated this is a current priority or focus. The concern here is that practices developed today may not incorporate the governance foundations that enable safe, sustainable and responsible AI. If AI consumer confidence is not a current priority, it won’t feature in corporate strategy considerations, and important future opportunities may be lost. Retrofitting the right practices, processes, strategic thinking and culture is hard. More fundamentally, insufficient attention to these issues could result in bad outcomes in the early stages of AI implementation, which could adversely affect confidence and trust and, in turn, AI opportunities now and into the future. More needs to be done to lift the priority and focus on building trust and confidence in AI.

The still-evolving AI governance practices and challenges identified by most workshop participants are broadly consistent with those identified in the 2019 Department of Industry, Innovation and Science pilots. This suggests a need to step up efforts to support the effective design, development and implementation of safe, sustainable and responsible AI.

In response to this, CEDA will use its national convening power to:

- Educate on the benefits of responsible AI;

- Showcase best practice and the positive impacts of responsible AI; and

- Share practical learnings and case studies.

In doing so, CEDA will seek to work collaboratively with other key stakeholders such as government, business and academia to magnify the impact and reach of this work and to identify further initiatives critical to Australia becoming a global leader in responsible AI.

There is a clear appetite among CEDA members to understand what responsible AI means in practice for their organisations, and how to build and maintain capabilities, robust systems and frameworks as a basis for trust and confidence in the design and use of AI. CEDA will build and leverage member engagement to share expertise and learnings focused on:

- Elevating the immediate priority of consumer trust and confidence as a way of driving a greater focus on the governance of AI more broadly;

- Enabling shared understanding of responsible principles for AI, what these mean in practice, and how to measure and report against them;

- Building multi-disciplinary whole-of-organisation approaches to the oversight and governance of AI; and

- Promoting the importance of building AI competencies and literacy, reflecting the ubiquity of digitisation, data collection and use.

In the absence of better AI business and governance practices, AI adoption will continue to lag in Australian organisations with long-term consequences for innovation, productivity and international competitiveness. This underscores the importance of learning from the challenges of implementing responsible AI and the need to accelerate efforts to improve education, understanding and the promulgation of best practice.

AI is shaping our future

Artificial intelligence (AI) is taking the world by storm and increasingly shaping economic and broader opportunities. It has become clear that AI has enormous potential to advance economic and societal wellbeing and enable improved environmental outcomes.

AI is already enabling better products and services to be delivered faster, cheaper and more safely across most industries and sectors. For consumers, AI applications are now part of our day-to-day lives and customer experiences.

To put the scale of potential economic transformation into perspective, Accenture analysed 12 developed economies and found that AI has the potential to double their annual economic growth rates and boost labour productivity by up to 40 per cent by 2035.

AI is a collection of interrelated technologies used to solve problems autonomously and perform tasks to achieve defined objectives without explicit guidance from a human being. These technologies include machine learning, natural language processing, virtual assistants, robotic process automation, unique identity, video analytics and more.

“AI has the potential to transform economies, unlock new societal and environmental value and accelerate scientific discovery.” – Dr Larry Marshall, Chief Executive, CSIRO

AI in Australia

The potential benefits to Australia are similarly transformative, particularly for an economy looking to reignite productivity, increase competitive advantage and raise living standards. AlphaBeta in 2018 estimated that better utilising digital technologies – including AI – would be worth $315 billion to the Australian economy by 2028.

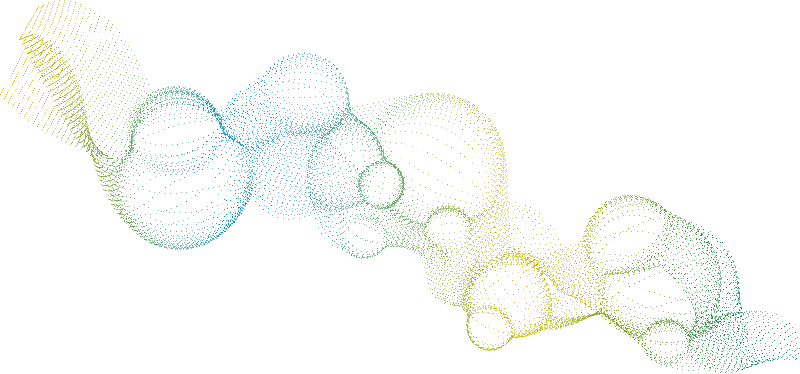

However, AI is still in the early stages of implementation in many Australian organisations and industries – only 34 per cent of firms use AI across their operations, and 31 per cent use it within a limited part of their business. Private investment in AI in Australia has accelerated rapidly in recent years, but still sits well below that of countries we regard as both competitors and potential future partners.

Countries above us in the AI investment stakes are also, not surprisingly, ahead of us in terms of the sophistication of AI use. A 2018 global survey found that 55 per cent of respondents from China used AI to widen a lead over the competition, while almost the same percentage from Australia (50 per cent) saw AI as a way to catch up or stay on par with competitors.

The AI race has only accelerated since then, with a 2021 PwC global survey reporting a huge lift in the uptake of AI. Only seven per cent of respondents reported they were not using AI in some capacity.

Given the scope for AI to unlock new opportunities and possibilities, this is a race in which Australia needs to be well positioned. CEDA’s focus is on how to accelerate the development, adoption and use of AI in Australia in a way that is safe, sustainable and responsible.

Responsible & trustworthy AI enables acceleration

The more that employees, customers, investors, government and the community have confidence and trust that AI is being used in a safe, sustainable and responsible way and in the interest of the public broadly, the greater the support for its adoption and use.

Building trust and confidence in the use of AI requires leadership, transparency and clarity around guiding values or principles, accountabilities, roles and responsibilities. In other words, it requires good governance. Good governance of AI, in turn, enables organisations to achieve better AI outcomes.

Accenture research shows that companies that scale AI successfully understand and implement responsible AI at 1.7 times the rate of their counterparts. Similarly, Cisco research finds that every US$1 invested in data privacy measures enables businesses to leverage US$2.70 of benefits. Meanwhile, a survey conducted by the Economist Intelligence Unit reports that 80 per cent of business respondents believe ethical AI is critically important to attracting and retaining talent.

Good governance of AI to ensure safe, sustainable and responsible adoption underpins community and stakeholder support and increases the likelihood that organisations will implement and scale up AI effectively and successfully. Together this creates a virtuous cycle whereby support for the widespread investment in and adoption of AI is maintained, and the transformative benefits of AI, including through higher productivity and economic growth, are more likely to be realised. CEDA members are motivated by the potential of igniting such an AI virtuous cycle in Australia.

AI governance, trust & confidence in practice – more attention needed

Currently, levels of trust in AI are generally low, although the issue is gaining more attention. One global study found that two-thirds (68 per cent) of respondents trusted a human more than AI to decide bank loans, and only 9 per cent felt very comfortable with businesses using AI to interact with them.

In a 2021 IBM global study, 40 per cent of consumers were found to trust companies to be responsible and ethical in developing and implementing new technologies, with little improvement in trust since 2018. However, one promising sign was an increase in the share of executives ranking AI ethics as important, rising from less than 50 per cent in 2018 to nearly 75 per cent in 2021. This growing awareness is welcome, but the caveat is that fewer than 20 per cent of executives strongly agreed that their AI ethics actions met or exceeded their stated principles and values.

Similarly, many members of the public are unaware of the use of AI in important areas. Research by the Australian Human Rights Commission found that 46 per cent of Australians were not aware that the government makes important decisions about them using AI. Recent investigations by the Office of the Australian Information Commissioner have found that organisations are collecting facial images and biometric information. While this type of collection is covered under privacy rules, and with a Privacy Act Review currently under way, it is clear there is uncertainty around how biometric information is collected digitally and ultimately used in Australia. The risk is that potential misuse could undermine consumer trust in emerging technologies more broadly.

Principles approach to responsible AI

An important starting point for the development of responsible AI governance can be the adoption of overarching principles to guide adoption and use. Across Western democratic countries, including Australia, the public discussion, development and adoption of AI ethical principles reached a peak around early 2019. This coincided with the Australian Federal Government announcing its voluntary AI ethics framework in 2019.

However, if trust is to be earned, these principles must be implemented effectively and consistently in day-to-day practice, and the results of these efforts reported openly and clearly. In the absence of effective implementation and reporting, ambitious principles may work against building trust by creating false or unreasonable expectations around behaviours that are ultimately not lived up to.

At CEDA’s 2020 Public Interest Technology Forum, one experienced AI professional remarked “AI today is like finance in the 1980s, operating as if it was the wild west” and another noted that “it is surprisingly easy to break the law when it comes to AI”.

While these observations were made broadly and not necessarily just in an Australian context, this state of affairs is concerning in the context of retaining support for the beneficial adoption and use of AI. These reflections prompted CEDA to explore the state of play regarding the implementation of ethical AI principles in Australian organisations.

AI Principles to Practice

CEDA undertook a series of roundtable workshops in late 2021 and early 2022 to gain qualitative insights on the progress towards, and challenges of, embedding and operationalising responsible AI principles and practices in Australian organisations. These insights are intended to complement broader work and surveys undertaken by others, including:

- AI Ethics in Action - An enterprise guide to progressing trustworthy AI (IBM, 2022)

- Responsible AI – Maturing from theory to practice (PwC, 2021)

- Responsible AI Index 2021 (Fifth Quadrant, Ethical Advisory, Gradient Institute, 2021).

The focus of discussions was to unpack how trust and consumer confidence in AI are being prioritised within organisations, and how ethical principles are being implemented in support of AI-driven business outcomes. Feedback was both verbal and written, with facilitators and participants sharing information online in real time. There were also a number of participant polls focusing on trust, confidence, understanding across business units, and helpful resources and frameworks.

The findings in this report do not purport to be a comprehensive or statistical representation of the state of play. They are intended to reflect the scope of progress and to highlight challenges, and in doing so, spark conversation and discussion around how progress against responsible AI principles can be enabled and accelerated.

Roundtables included participants from organisations and sectors that are mature in their approach to AI – service providers and/or sectors most advanced in the use and deployment of AI (financial services and communications) – and from sectors that are in the initiating and developing stages of AI maturity. It is important to note that, by virtue of participating in the workshops, participants are likely to represent organisations more engaged on the issues of responsible AI (i.e. positive self-selection).

Australia’s eight Artificial Intelligence Ethics Principles were released in 2019 and designed to ensure AI is safe, secure and reliable. The Department of Industry, Innovation and Science (now the Department of Industry, Science and Resources) introduced this voluntary framework to support Australia becoming a global leader in responsible and inclusive AI.

FIGURE 4 – Australia’s AI Ethics Principles

A voluntary framework

The principles are voluntary. We intend them to be aspirational and complement – not substitute – existing AI regulations and practices.

By applying the principles and committing to ethical AI practices, you can:

- build public trust in your product or organisation

- drive consumer loyalty in your AI-enabled services

- positively influence outcomes from AI

- ensure all Australians benefit from this transformative technology.

Responsible AI state of play in Australia

There was strong CEDA member interest in participating in our responsible AI workshops across a broad range of sectors and active engagement in the workshops themselves.

The nature and tone of discussions throughout the workshops highlighted a keen interest in openly sharing information and progress, leaning into challenges and increasing knowledge and awareness of best practice. This was driven by a desire to improve their own processes and outcomes, and in recognition of the broader benefit of retaining community support for AI.

Limited AI maturity

The level of AI maturity reported by workshop participants was consistent with the maturity curves reported in other larger sample surveys. Specifically, when asked to reflect on how advanced the adoption of AI was within their organisations, 22 per cent reported limited, 69 per cent indicated it was developing and eight per cent mature. This is almost identical to the results reported by the Responsible AI Index.

Across our workshops, there was broad awareness of the potential opportunities presented by AI and a strong desire to use AI effectively to improve customer service, enhance organisational decision-making and efficiency, and gain market advantage.

There was also a clear recognition of the importance of trust and confidence in AI, with nearly 90 per cent of workshop respondents indicating that trust and consumer confidence in AI in the future was a high or very high priority (i.e., rated 4 or 5 on a 1 to 5 scale of priority – see Figure 8).

Regulated elements of responsible AI getting attention

According to participants, the aspects of responsible AI implementation that are attracting the greatest attention within their organisations are also attracting significant government and public scrutiny – specifically privacy and cyber security. This is particularly the case for organisations that are at relatively less mature stages of AI adoption and use.

Broadly, workshop participants expressed a higher degree of confidence and clarity in these regulated areas, including in understanding regulatory and stakeholder expectations, how to discuss and communicate these issues, the practical policies and processes related to cyber security and privacy, and the consequences of failing to meet expectations and regulatory requirements.

State of play – challenges & risks

Workshop participants clearly indicated a strong interest in and focus on the positive potential of AI in their organisations. However, the roundtable discussions drew out the considerable challenges that many organisations are encountering in their approaches to adopting AI, how they are evaluating and balancing benefits and risks, and understanding and delivering against expectations regarding the responsible use of data and AI.

Default ‘yes’ or ‘no’ to AI

One theme that emerged across the workshops for those less advanced in the use of AI was a tendency towards polarised approaches to considering potential risks, the management of these risks and broader expectations. Put simply, these relatively less mature organisations tended to default to ‘yes’ or ‘no’. The following participant observations are illustrative of the risk-accepting side:

"Our leaders love it (AI) without necessarily understanding it, which is creating pressure on people to operationalise AI at pace."

"People on the ground are running too fast – from one deadline to the next not linked to risk management or governance."

And, on the risk-averse side:

"Given the complexity and perceived risks, it can be hard to get senior sponsorship."

Effective management of both positive and negative risk requires structured and nuanced processes to guide the consistent assessment of potential benefits and adverse impacts and the management of both. Organisations that reported a high level of AI maturity also presented more nuanced approaches to the consideration of AI opportunities and risks and approaches that were more closely aligned to organisation-wide values and risk management frameworks.

Devil in the principles detail

A critical factor in effective AI risk identification and management within organisations is the ability to reach broadly shared understandings of the nature and likelihood of both the potential risks and opportunities. Building a shared understanding of expectations related to responsible AI and the principles underpinning this are especially important.

Not surprisingly, workshop participants reported grappling with building a shared understanding and communicating the more subjective aspects of responsible AI use. This was particularly so for principles relating to fundamental human rights such as fairness, inclusion and equity, which can mean different things to different people. Participants noted that while high-level principles are easy to agree on, many organisations do not have the tools or internal capabilities to enable constructive discussion and resolution of what principles and concepts mean in the context of daily business decisions and potential trade-offs.

Similarly, while understanding the importance of transparency, explainability, and contestability in enabling AI adoption and use, participants highlighted the challenges of delivering against these objectives in practice. Organisations are grappling with how much information to make available; how to communicate complexity in plain language; how to give the right prominence to commitments and progress; and how to account for the differing appetites and requirements for information of both internal and external stakeholders. Participants recognised, for example, that overly detailed and complex terms and conditions for products and services have, in practice, become effectively useless to consumers.

AI governance and understanding is siloed

While most participants identified that their organisations had broad and robust risk identification and management frameworks, concerns were raised about how, and the extent to which, AI-specific risks are identified, captured and managed within these broader frameworks. The absence of formal frameworks and approaches to AI governance, including a formal consideration of ethical/responsible issues in their organisations, was called out by some participants.

Participants widely identified that accountability and responsibility for AI governance currently resided largely or solely in their organisations’ tech and data teams. This siloed approach places a significant burden on technology teams and raises questions regarding how well connected ‘experts’ are to the concerns and evolving expectations of customers and broader stakeholders. Relying on siloed AI governance increases the potential for inconsistent application of broader risk frameworks and disciplines across business units and products/services. It also runs the risk of issues being missed if experts are not always ‘in the room’. One participant noted: “we are very siloed, [with] pockets of activity, understanding and awareness of AI, [we] need to draw that together”.

These governance challenges are exacerbated when there is no broad understanding of, and action towards, responsible AI practices and expectations across organisations. Insights from our workshops suggest this is a challenge for many organisations.

Participants were polled during the workshops on the understanding of responsible AI practices and their application within their organisations. Poll results showed that one-third of participants believed that business leaders in their organisations misunderstood AI practices, while one-quarter believed that their risk and compliance teams misunderstood AI practices (Figure 7). Even where responsible AI approaches are understood, it is largely the tech and data teams that are seen as acting on them. However, globally there are signs that this is shifting. An IBM survey found that business leaders are taking more accountability for AI ethics in their operations, with the figure increasing from 15 per cent in 2018 to 80 per cent in 2021.

SAS research on accelerated digital transformation, which has strong parallels to AI transformation, reported significant challenges that can be linked to siloed expertise and governance. These included: having to explain data science to others; the inability to integrate findings into the organisation’s decision-making processes; and maintaining responsible expectations about the potential impact of data science.

Participants from self-disclosed mature AI organisations revealed more nuanced approaches to risk identification and management centred around broader organisational processes and governance, with AI controls and processes strongly linked to all-of-organisation values and strategy. However, these organisations were in the minority.

Training optional

Interestingly, a number of participants noted that while general governance and compliance training, including data and privacy training, is compulsory for all employees, AI-related compliance training is voluntary or targeted towards technology and data team users. Organisations need to broaden education and training and build multi-disciplinary, organisation-wide capability and understanding of responsible AI issues and practices.

Responsible procurement – work to be done

With many organisations in Australia procuring AI services and systems from third-party providers, one critical governance challenge is the ability to assess the responsibility of services and suppliers. Participants observed that their organisations are struggling with the procurement process and how to assess the integrity of providers and the effectiveness of approaches to responsible AI in their services and systems.

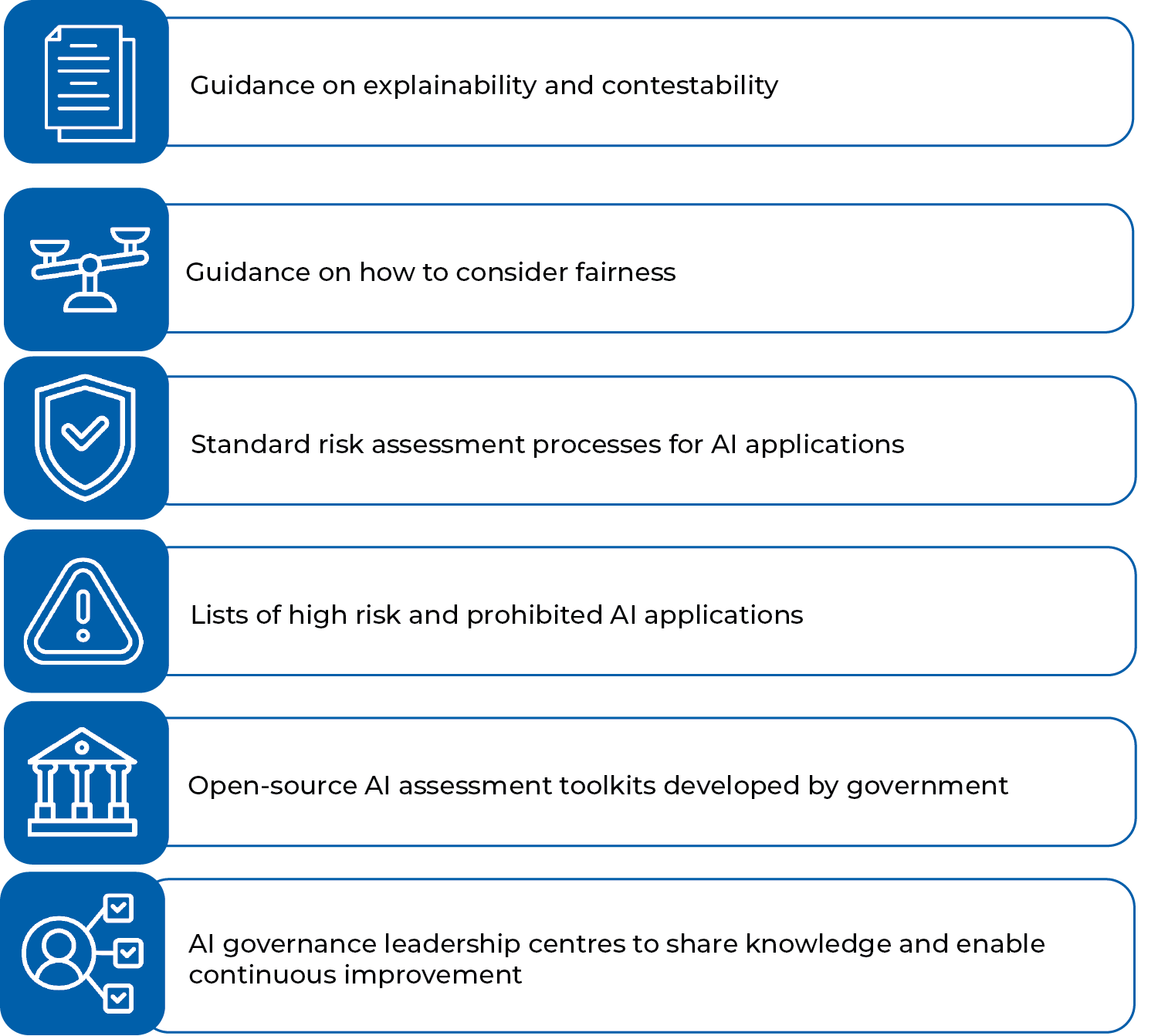

Looking for guidance

Against the backdrop of the challenges summarised above, participants expressed interest in support and direction from regulators and experts on AI principles, guidance and toolkits. The most helpful, according to preferences revealed through workshop polling, would be:

A disconnect between trust and confidence

While nearly 90 per cent identified trust and consumer confidence in AI as being a high or very high priority for the future, only 12 per cent of workshop respondents identified trust and confidence in AI as a priority in their organisations right now.

The immediacy or primacy of commercial drivers of AI adoption may be one factor at play, consistent with the pressure noted above for businesses to “get on with it”. As one participant noted: “early discussions on AI governance and corporate responsibility were put on hold in response to corporate activity”. Another related factor may be limited organisational capability and capacity. One participant commented on “limited discussions on trust and confidence… expecting more of a focus as we build our internal capability”.

Whatever the cause, building AI processes and approaches without prioritising trust and confidence runs the very real risk that organisations entrench poor techniques, processes and outcomes and fail to meet community expectations. This in turn risks the loss of AI’s social license, which has broader consequences. Participants readily acknowledged that evidence and exposure of poor outcomes and practices in one organisation can tarnish a sector and/or AI more broadly. Even if that risk does not eventuate, it is much harder to retrofit a culture of responsibility and associated processes down the track.

Next steps

The widespread interest in and adoption of ethical or responsible principles of AI confirms a high level of understanding that AI can deliver significant benefits if its development, adoption and use is grounded in sound governance. This must be centred on the wellbeing and interests of people and community and their trust and confidence that AI is beneficial to them.

While acknowledging the positive engagement of many organisations, insights from our workshops indicate there is still a way to go to effectively and consistently implement responsible AI practices. The still-evolving AI governance practices and challenges identified are broadly consistent with those identified in the 2019 Department of Industry, Innovation and Science pilots.

Lead with intent

At a national level, there is an opportunity to build a stronger narrative around the positive role that AI can play in driving better economic, social and environmental outcomes, and our ability to develop, adopt and use AI in a safe, responsible and sustainable way. In other words, to become a leading, responsible AI nation.

There is enormous potential to use AI to lift business performance, productivity and economic growth; improve government services delivery and social inclusion; and enhance environmental outcomes. Putting the responsible AI principles of public interest, benefit and trust at the core of our national aspirations would send a clear signal to business and other stakeholders on the importance of this in accelerating a virtuous AI investment cycle in Australia.

CEDA will use its content platforms and events programming to drive public discussion and awareness of the transformative opportunities of accelerated responsible AI.

AI for good

As part of its newly established ESG (Environmental, Social and Governance) Community of Practice, CEDA will explore and share the ways in which AI (and technology more broadly) can be used to advance Australia's progress against the Sustainable Development Goals (SDGs). Australia's performance against many of the SDGs needs improvement or significant breakthroughs if the goals are to be achieved or, in some cases, if progress is to start heading in the right direction. Focusing attention on the transformative benefits through the SDGs will help build awareness of the potential public interest benefits of accelerated AI investment.

Build responsible AI maturity & capability across the CEDA membership

There is a clear appetite among CEDA members to understand what responsible AI means in practice, and how to build capabilities, robust systems and frameworks to in turn increase trust and confidence in AI internally and externally.

CEDA will use its cross-sectoral convening power to establish an ongoing member forum for sharing expertise and learnings focused on:

- Elevating the immediate priority of consumer trust and confidence as a way of driving greater focus on the governance of AI more broadly;

- Enabling shared understanding of the key responsible principles for AI, what these mean in practice and how to measure and report against them;

- Building multi-disciplinary whole-of-organisation approaches to the oversight and governance of AI; and

- Promoting the importance of AI competencies and literacy, and of building them more broadly across organisations, reflecting the ubiquity of digitisation and of data collection and use.

Practice & policy learnings

Throughout our engagement with members and stakeholders, CEDA will reflect on the challenges and insights revealed, how these apply beyond our members and their potential policy implications.

We will share these insights, including with educators and policymakers, as appropriate, with a view to creating the right institutional arrangements, policies, competencies and culture to drive positive AI opportunities and outcomes more broadly in Australia.


AI Principles to Practice

CEDA. (2020). PIT (Public Interest Technology) Forum 2020. Retrieved 2022, from https://www.ceda.com.au/NewsAndResources/VideosAndPhotos/Technology-Innovation/PIT-Forum-2020

Accenture. (2018). Realising the Economic and Societal Potential of Responsible AI. Retrieved 2022, from https://www.accenture.com/_acnmedia/pdf-74/accenture-realising-economic-societal-potential-responsible-ai-europe.pdf

Accenture. (2017). Why Artificial Intelligence is the Future of Growth. Retrieved 2022, from https://www.accenture.com/ca-en/insight-artificial-intelligence-future-growth-canada

AlphaBeta. (2018). Digital innovation: Australia’s $315B opportunity. Sydney: AlphaBeta.

Fifth Quadrant, Ethical Advisory, Gradient Institute. (2021). The Responsible AI Index. Fifth Quadrant. Retrieved 2022, from https://www.fifthquadrant.com.au/2021-responsible-ai-index

Deloitte. (2019). State of AI in the Enterprise, 2nd Edition survey. Deloitte Center for Technology, Media & Telecommunications. Retrieved 2022, from https://www2.deloitte.com/content/dam/insights/us/articles/4780_State-of-AI-in-the-enterprise/DI_State-of-AI-in-the-enterprise-2nd-ed.pdf

PwC. (2021). AI Predictions 2021. London: PricewaterhouseCoopers. Retrieved 2022, from https://www.pwc.com/us/en/tech-effect/ai-analytics/ai-predictions.html

Accenture. (2020). Getting AI results by "going pro". Retrieved from https://www.accenture.com/au-en/insights/applied-intelligence/professionalization-ai

Cisco. (2020). The 2020 Data Privacy Benchmark Study. Retrieved 2022, from https://newsroom.cisco.com/c/r/newsroom/en/us/a/y2020/m01/cisco-2020-data-privacy-benchmark-study-confirms-positive-financial-benefits-of-strong-corporate-data-privacy-practices.html

Economist Intelligence Unit. (2020). Staying ahead of the curve – The business case for responsible AI. Retrieved 2022, from https://www.eiu.com/n/staying-ahead-of-the-curve-the-business-case-for-responsible-ai/

PEGA. (2019, July). AI and empathy: Combining artificial intelligence with human ethics for better engagement. Pegasystems. Retrieved 2022, from https://www.pega.com/insights/resources/ai-and-empathy-combining-artificial-intelligence-human-ethics-better-engagement

IBM. (2022). AI ethics in action. IBM Institute for Business Value. Retrieved 2022, from https://www.ibm.com/thought-leadership/institute-business-value/report/ai-ethics-in-action

Australian Human Rights Commission. (2020). Human Rights and Technology project. Australian Government. Retrieved 2022, from https://humanrights.gov.au/our-work/rights-and-freedoms/projects/human-rights-and-technology

Richardson, M., Andrejev, M., & Goldenfein, J. (2020, June 22). Clearview AI facial recognition case highlights need for clarity on law. Retrieved from Choice (Op-ed): https://www.choice.com.au/consumers-and-data/protecting-your-data/data-laws-and-regulation/articles/clearview-ai-and-privacy-law

Google Trends. (2022). AI ethics. Retrieved from Google Trends: https://trends.google.com.au/trends/explore?date=today%205-y&q=ai%20ethics

Fifth Quadrant, Ethical Advisory, Gradient Institute. (2021). The Responsible AI Index. Fifth Quadrant. Retrieved 2022, from https://www.fifthquadrant.com.au/2021-responsible-ai-index

Department of Industry, Science, Energy and Resources. (n.d.). Australia’s Artificial Intelligence Ethics Framework. Retrieved from https://www.industry.gov.au/data-and-publications/australias-artificial-intelligence-ethics-framework

Fifth Quadrant, Ethical Advisory, Gradient Institute. (2021). The Responsible AI Index. Fifth Quadrant. Retrieved 2022, from https://www.fifthquadrant.com.au/2021-responsible-ai-index

IBM. (2022). AI ethics in action. IBM Institute for Business Value. Retrieved 2022, from https://www.ibm.com/thought-leadership/institute-business-value/report/ai-ethics-in-action

SAS. (2022). Accelerated digital transformation: Research, perspectives and guidance from data scientists and thought leaders. Retrieved from https://www.sas.com/content/dam/SAS/documents/marketing-whitepapers-ebooks/ebooks/en/accelerated-digital-transformation-datascientists-112560.pdf

Department of Industry, Science, Energy and Resources. (n.d.). Australia’s Artificial Intelligence Ethics Framework - Testing the AI Ethics Principles. Retrieved 2022, from https://www.industry.gov.au/data-and-publications/australias-artificial-intelligence-ethics-framework/testing-the-ai-ethics-principles

The findings presented in this report are based on a series of facilitated workshop discussions with key stakeholders and CEDA members. The workshops were designed and delivered by CEDA member Portable, an organisation that uses research, design and technology for positive impact and transformational change. Portable’s support for our work is greatly appreciated, with special thanks to Simon Goodrich, Sarah Kaur and Aishling Costello from the Portable team for their efforts, insights and enthusiasm, which were critical to the success of the workshops.

Thanks also to Dr Catriona Wallace and Dr Tiberio Caetano from the Gradient Institute and Dr Rahil Garnavi from IBM for providing expert introductions to the workshops.

Conclusions and insights drawn from the workshops are the responsibility of CEDA.

This report has been prepared with the support of CEDA’s Public Interest Technology founding partners Google and IBM.
